Industrial automation needs the best possible eyes: 3D cameras with time-of-flight technology (3D ToF). In a cross-divisional project, SICK launched two such vision systems at exactly the right time, and each of them sets standards in its field.

Seeing more together: 3D vision cameras with time-of-flight technology

Action in an Industry 4.0 production facility. Twelve packages correctly on the pallet: check. Twelve packages correctly on the pallet: check. Twelve packages correctly on the pallet: check. Order picking runs like clockwork, a case for blue. Meanwhile, a mobile robot fetches a fresh supply of pallets. When an employee comes a little too close to it on its way through the factory hall, the robot slows down immediately, a case for yellow.

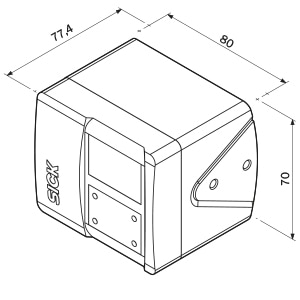

Blue stands for the highest automation requirements, which means the Visionary-T Mini product line. It is the first line whose 3D ToF technology SICK developed in cooperation with Microsoft. Yellow stands for the highest safety requirements, which means the safeVisionary2 camera. Apart from its color, it looks almost identical to the Mini at first glance. However, it is the world's first 3D ToF camera to achieve safety certification to Performance Level c (PL c) according to EN ISO 13849. Both are so-called 3D snapshot cameras, and industrial customers from a wide range of sectors use them.

Focus on programmability or safety

"What makes our blue camera special is not only its compact size, robustness and outstanding data quality, but also its programmability. This programmability enables so-called edge computing, in which apps are programmed to run directly on the sensor. Customers can choose the software that suits the application they want to solve with the sensor. That is software-defined sensing. The device also brings its own computing capacity in addition to the camera, and that is what makes it so versatile," emphasizes Dr. Anatoly Sherman, Head of 3D Snapshot, Product Management & Applications Engineering.

With the yellow safeVisionary2, by contrast, the main advantage is already in the product name: safety. Above all, this means collision protection for mobile robots, a 3D protective field for collaborative robots, and fall protection for service robots. "Our yellow cameras are certified for exactly these tasks, in this case by TÜV, and the certification includes the integrated software. Because the safeVisionary2 is a safety product, that software is already on the device. The user only adjusts a few parameters that depend on the integration and the application, and can then put the camera into operation right away," explains Torsten Rapp, Head of Business Unit Autonomous Safety, describing the different fields of application of the two camera sensors.

Beyond the technology, something else makes these cameras special: they were the first to be developed through collaboration across business units.

"Our customers keep a constant eye on their productivity, and they want cost-effective solutions with the greatest possible added value, delivered ever faster," says Sherman. "For us, this was the right moment to expand our internal collaboration significantly. For the first time, we developed a particularly adaptable, programmable 'blue' camera and a safety-oriented 'yellow' camera together in one project from the very start." During the development work, which spanned several years, the blue team contributed its 3D expertise and the yellow team contributed its safety know-how.

New approach, new way of thinking

A task like this also required a change of mindset from everyone involved. Developing a blue automation sensor normally allows certain degrees of freedom, and the development process for the safety variant restricted them. "Yes, in line with the customer focus we were aiming for, we sometimes all had to go the extra mile together, but it was worth it," says Rapp with conviction. Thanks to the new approach, two products reached the market relatively quickly, and both met with strong demand right away.

For everyone involved, the project is a successful example of how SICK can approach future sensor developments. The future itself is also changing. In industry, it is moving beyond automation toward autonomy and toward fully networked machines and sensors that enable adaptive production.

The goal is to evaluate all captured production data with intelligent algorithms in real time and to adapt processes continuously. The result is intended to be a fully digitalized, networked production environment. "Our vision systems also work in real time, so they are a good building block for this," Rapp is convinced. As "eyes," the cameras supply exactly the high-quality 3D data that optimal autonomous decisions require. "And as everyone knows, without good eyes you quickly reach your limits," adds Sherman.

3D time-of-flight technology: precise 3D data in real time

3D time-of-flight (3D ToF) means measuring how long a light signal takes to travel between a camera and a target scene, for every pixel at the same time. Once the arrival time or the phase shift of the reflected light is known, the camera can calculate the distance to the object and build a distance image. The light travels to the object and back, so the distance is half the path covered during the measured time. SICK's Visionary-T Mini, for example, delivers more than 6.5 million 3D distance values per second, and it does so very stably. The method is also known as 3D snapshot technology. Because it is based on time-of-flight measurement, it can capture even stationary scenes in three dimensions without moving any actuators or mechanical parts in the camera.
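The measuring principle above can be sketched in a few lines of Python. The sketch covers two distance calculations: one from the round-trip time of a light pulse, and one from the phase shift of continuously modulated light. It is an illustration under stated assumptions and does not describe how SICK's firmware works. The function names, the 20 MHz modulation frequency and the tiny 2 × 2 phase image are hypothetical. The resolution of 512 × 424 pixels at 30 frames per second is also an assumption. It was chosen because it reproduces the quoted figure of more than 6.5 million values per second, and it is not taken from a SICK data sheet.

```python
import math

# Speed of light in vacuum in m/s (exact by definition of the metre).
C = 299_792_458.0


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Pulsed ToF: the light travels to the object and back, so halve the path."""
    return C * t_round_trip_s / 2.0


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: d = c * phi / (4 * pi * f_mod).

    The phase wraps every 2*pi, so the result is only unambiguous
    up to unambiguous_range(f_mod_hz).
    """
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance before the measured phase wraps around: c / (2 * f_mod)."""
    return C / (2.0 * f_mod_hz)


def depth_map(phase_image: list[list[float]], f_mod_hz: float) -> list[list[float]]:
    """Convert every pixel of a phase image (radians) into a distance in metres."""
    return [[distance_from_phase(p, f_mod_hz) for p in row] for row in phase_image]


def points_per_second(width_px: int, height_px: int, frames_per_s: int) -> int:
    """3D distance values produced per second: one per pixel per frame."""
    return width_px * height_px * frames_per_s


if __name__ == "__main__":
    F_MOD = 20e6  # hypothetical modulation frequency: 20 MHz
    print(f"10 ns round trip -> {distance_from_round_trip(10e-9):.3f} m")
    print(f"unambiguous range at 20 MHz -> {unambiguous_range(F_MOD):.3f} m")
    # Hypothetical 2x2 phase image in radians.
    print(depth_map([[0.0, math.pi / 2], [math.pi, 3 * math.pi / 2]], F_MOD))
    # Assumed resolution and frame rate, not an official data-sheet value.
    print(points_per_second(512, 424, 30))  # 6512640
```

With these numbers, a 10 ns round trip corresponds to about 1.5 m. The phase-based variant measures the shift of a modulated signal instead of timing each pulse directly. The price is a limited unambiguous range, which is about 7.5 m at the assumed 20 MHz.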

Momentum: Magazine accompanying the 2022 Annual Report

It is worth asking what gave a success story its momentum. What was the moment or trigger that set everything in motion and led to success?

The articles in our magazine show that momentum is no coincidence. It is also more than a chain of lucky circumstances. Momentum comes from intuition, inspiration, experience, competence and passion.


More articles from the SICK Sensor Blog
